Membership Inference Test

A method to improve the transparency of Artificial Intelligence training. MINT is a method for experimentally determining whether certain data has been used to train machine learning models.

*Work in progress. Demo available offline. Demo paper under review. The online demonstrator and the associated GitHub will be presented soon.

How it works

We propose a reformulation of AI privacy analysis methods to be used as an auditing tool to detect potential usage of data without the owners’ consent, which we refer to as Membership Inference Test (MINT).

This demonstrator was developed as a research platform to promote transparency in Artificial Intelligence. This initial version of the demonstrator includes only face recognition models.

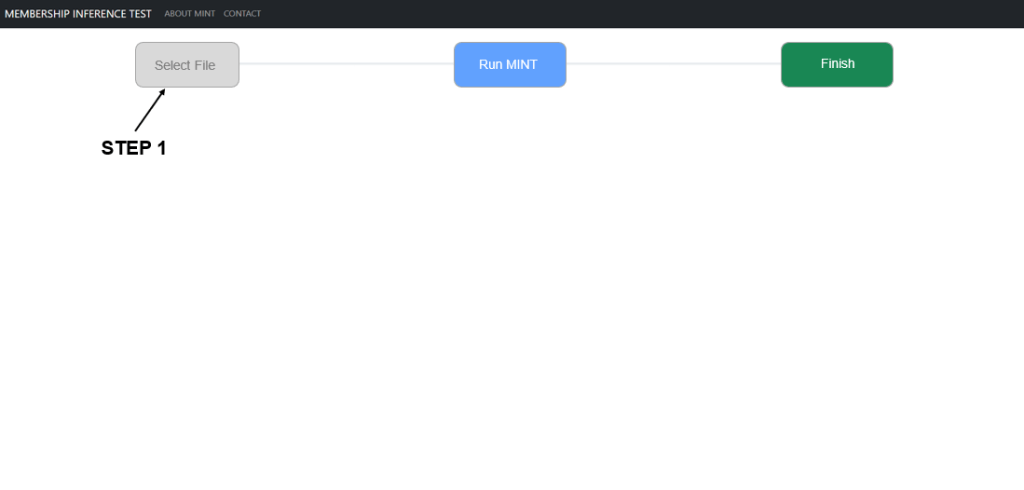

STEP 1: Upload an image.

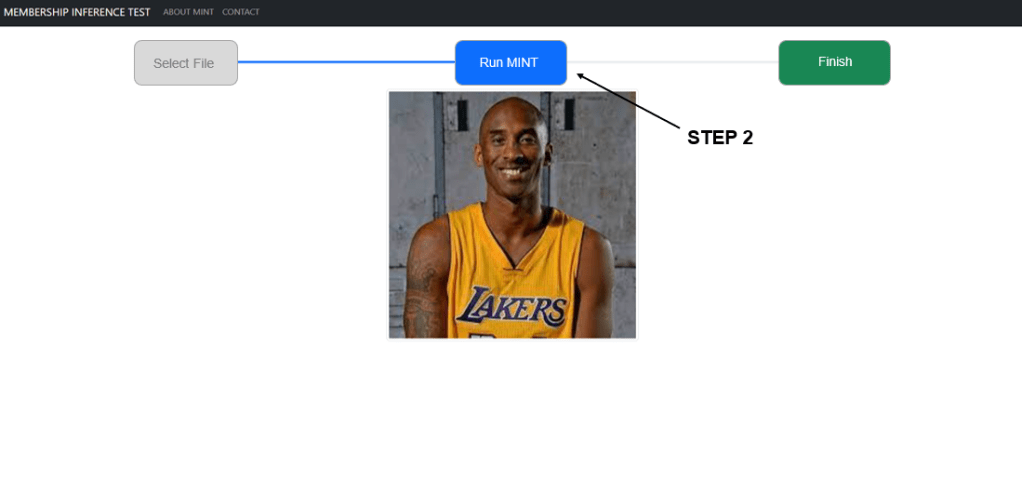

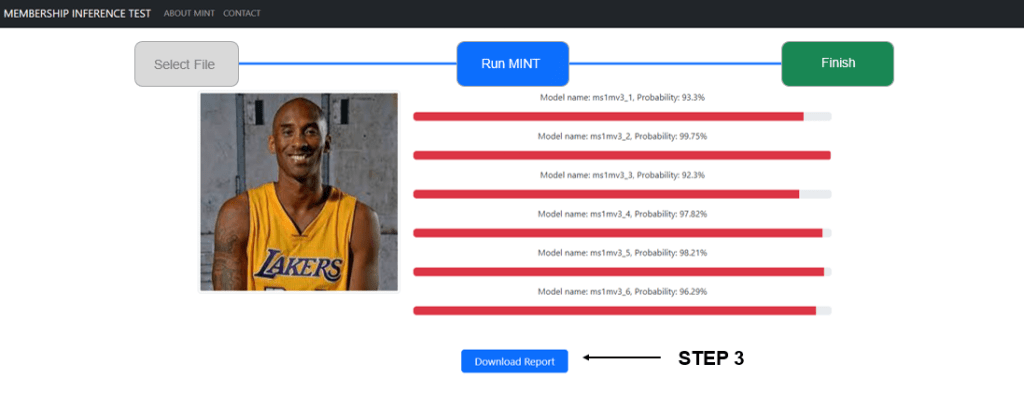

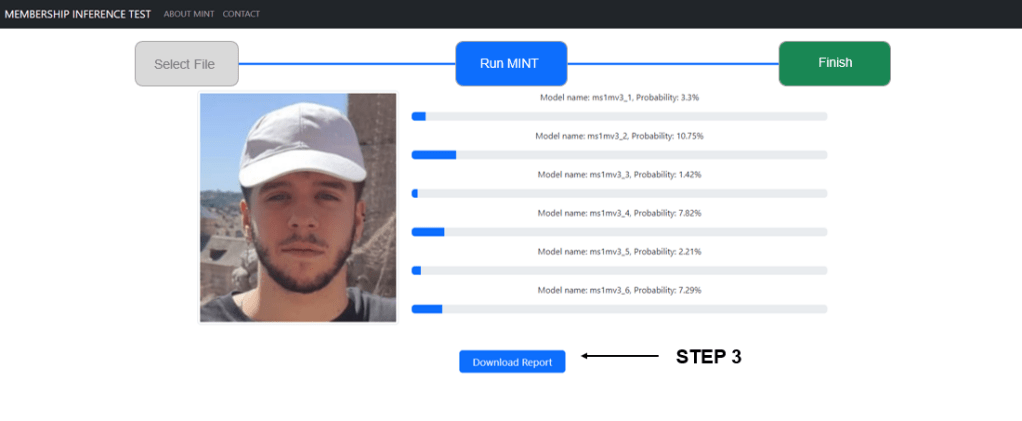

STEP 2: Click on the button “Run MINT”. The platform processes the image using the available MINT models and provides a report that includes the probability of this image being used to train each of these models.

STEP 3: Download the report certifying the results and the details (date, models, probabilities, etc.).

Theoretical Framework

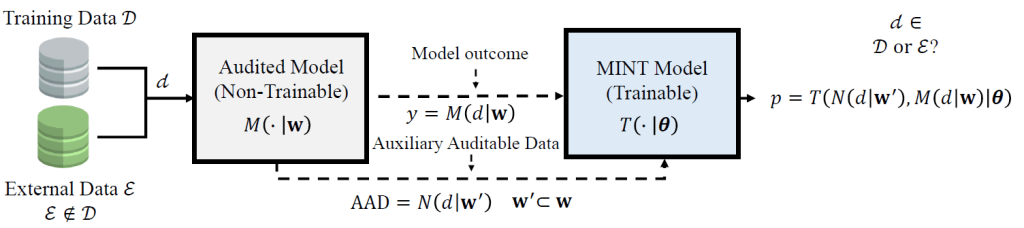

Let us consider a Training Dataset (D), an External Dataset (E) and a collection of samples d (d ∈ D ∪ E). We assume a learned model (M) that is trained for a specific task (e.g., text generation, face recognition, etc.) using the dataset D. For any input data record (d), the model (M) generates an outcome (y) based on d and a set of parameters (w) learned during the training process, i.e., y = M(d|w).

We assume that an authorized auditor has access to model M, which allows the auditor to obtain information about how M processes data d. Alternatively, the auditor may hold information detailing how M has processed data d, even without direct access to the model. This information comprises the generated Model Outcome y = M(d|w) and, if possible, some intermediate results (e.g., activation maps of specific layers in a neural network). These intermediate outcomes N(d|w′) provide insights about a subset of parameters w′ ⊂ w. We define these intermediate outcomes as Auxiliary Auditable Data (AAD). The auditor does not need the full description of the model M or the values of the complete set of parameters w to obtain the AAD.

The aim of the proposed MINT is to determine whether given data d was used to train the model M. To this end, an authorized auditor employs a collection of AAD and/or Model Outcomes y to train a MINT Model T(·|θ) able to predict whether a data sample d belongs to the Training Data D or the External Data E (D ∩ E = ∅). The proposed MINT models exploit the memorization capacity of machine learning processes.
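The role of the MINT Model T(·|θ) can be illustrated with a minimal sketch. It is not the architecture used in the demonstrator: a plain logistic regression stands in for T, and synthetic Gaussian vectors stand in for the AAD N(d|w′), under the loudly stated assumption that samples from D and samples from E produce slightly different activation statistics. All function names, dimensions and hyperparameters below are hypothetical.

```python
import math
import random


def make_synthetic_aad(n, dim, shift, rng):
    """Hypothetical stand-in for AAD N(d|w'): Gaussian vectors with mean `shift`."""
    return [[rng.gauss(shift, 1.0) for _ in range(dim)] for _ in range(n)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(theta, x):
    """T(x|theta): estimated probability that x was part of the training data D."""
    bias, weights = theta
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


def train_mint_model(features, labels, epochs=50, lr=0.1):
    """Fit a logistic regression by SGD; label 1 means 'in D', 0 means 'in E'."""
    bias, weights = 0.0, [0.0] * len(features[0])
    for _ in range(epochs):
        for x, y in zip(features, labels):
            err = predict_proba((bias, weights), x) - y
            bias -= lr * err
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
    return bias, weights


rng = random.Random(0)
# Assumption: AAD of training samples (D) and external samples (E) differ in mean.
aad_in = make_synthetic_aad(200, 8, 0.5, rng)
aad_out = make_synthetic_aad(200, 8, -0.5, rng)

# The auditor trains T on AAD whose membership is known.
pairs = [(x, 1) for x in aad_in[:150]] + [(x, 0) for x in aad_out[:150]]
rng.shuffle(pairs)
theta = train_mint_model([x for x, _ in pairs], [y for _, y in pairs])

# Held-out AAD is then used to check how well T separates D from E.
held_out = [(x, 1) for x in aad_in[150:]] + [(x, 0) for x in aad_out[150:]]
correct = sum((predict_proba(theta, x) >= 0.5) == bool(y) for x, y in held_out)
accuracy = correct / len(held_out)
print(f"held-out MINT accuracy: {accuracy:.2f}")
```

In a real audit, the feature vectors would come from the audited model M (for example, activation maps of selected layers), and the separability assumed here would have to be established experimentally rather than built into the data.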

How to add your AI model

Paper under review. In order to guarantee anonymization of the submission, contact information will be available after the review process.

MINTest was developed as a research platform. The usage of the platform is free.

Experiments and Results

For a complete description of experiments and results, please visit our recent arXiv paper “Is my Data in your AI Model? Membership Inference Test with Application to Face Images”.